John Cho, Tria’s Chief Technology Officer, examines the impact of emerging technologies through his unique perspective. In this blog, John answers the question “what’s my jam?”, breaking down how music streaming services use artificial intelligence (AI) to determine users’ music tastes.

When you hear the terms machine learning and artificial intelligence (AI), you may think of a generative AI platform like ChatGPT, which burst into mainstream consciousness following its 2022 launch. While that may have been the first time that broader society started paying serious attention to AI – and more specifically, machine learning – both have existed for decades. The proliferation of mobile applications has made machine learning so ubiquitous that you may not think about all the ways it touches your daily life.

Let’s walk through one everyday example: streaming music.

Whether you prefer hip hop, show tunes, or jazz, streaming services have one thing in common: they use AI to answer the question “What’s my jam?”

It’s not magic or some inscrutable science at work in these apps; it’s a machine learning algorithm that statistically analyzes a user’s musical preferences based on their choices and listening history to predict the next song they’ll likely want to hear. Whether you use Pandora, Spotify, or Prime Music, you may have wondered: “How do they know my music tastes so well?”

Here’s an outsider’s simplified view of how streaming music services work:

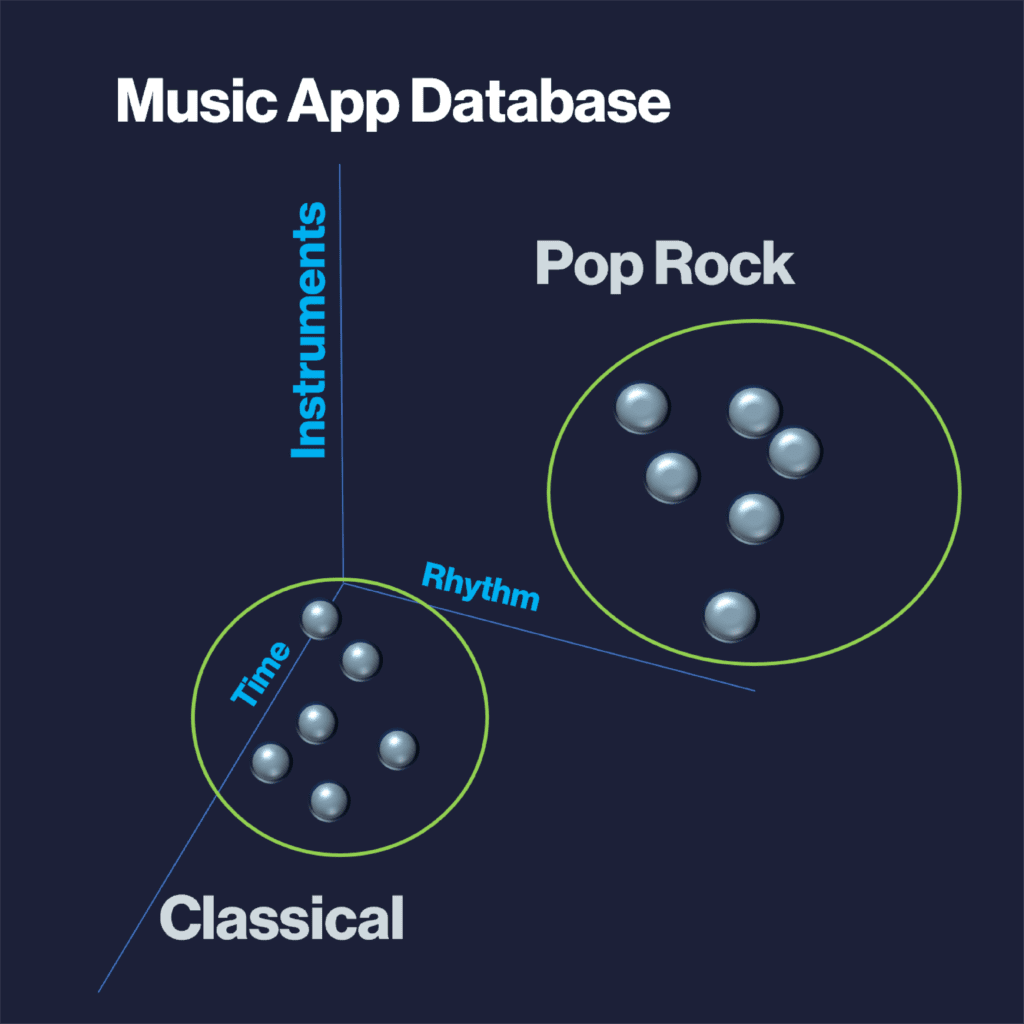

First, the streaming service behind the app decomposes the songs you listen to into different data attributes – for instance, rhythm and time. If the song features a voice, is the vocal style operatic, pop, or rap? What kinds of instruments does it use? Is it electric guitars, or mainly horns and violins?
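To make that concrete, here is a minimal sketch of how a song's attributes might be turned into numbers a computer can compare. The attribute names (`tempo_bpm`, `vocal_style`, `instruments`) and the example values are my own illustrative assumptions, not any streaming service's actual data model.

```python
from dataclasses import dataclass

# Hypothetical attribute vocabularies; real services use far richer, proprietary features.
VOCAL_STYLES = ["none", "operatic", "pop", "rap"]
INSTRUMENTS = ["electric_guitar", "drum_kit", "horns", "violins"]


@dataclass
class Song:
    title: str
    tempo_bpm: float        # beats per minute (assumed attribute)
    vocal_style: str        # one of VOCAL_STYLES
    instruments: frozenset  # subset of INSTRUMENTS


def to_vector(song: Song) -> list[float]:
    """Turn a song's attributes into a flat list of numbers."""
    tempo = [song.tempo_bpm / 200.0]  # rough scaling so tempo sits near the 0-1 range
    vocals = [1.0 if song.vocal_style == s else 0.0 for s in VOCAL_STYLES]
    instruments = [1.0 if i in song.instruments else 0.0 for i in INSTRUMENTS]
    return tempo + vocals + instruments


# Made-up example track, not measurements of a real song.
rock = Song("Example rock track", 134.0, "pop", frozenset({"electric_guitar", "drum_kit"}))
print(to_vector(rock))
```

Each position in the resulting list answers one question about the song, which is what lets the app compare one track against another.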

Once the app collects these and many other attributes about each song, it groups together the music you’ve listened to with music in its database containing similar attributes. The app can use the attributes to classify the music by genre: a classical piece might have sweeping violins and horns, not drum kits and electric guitars. A pop song may consist of certain types of rock beats, vocals, and melodies.

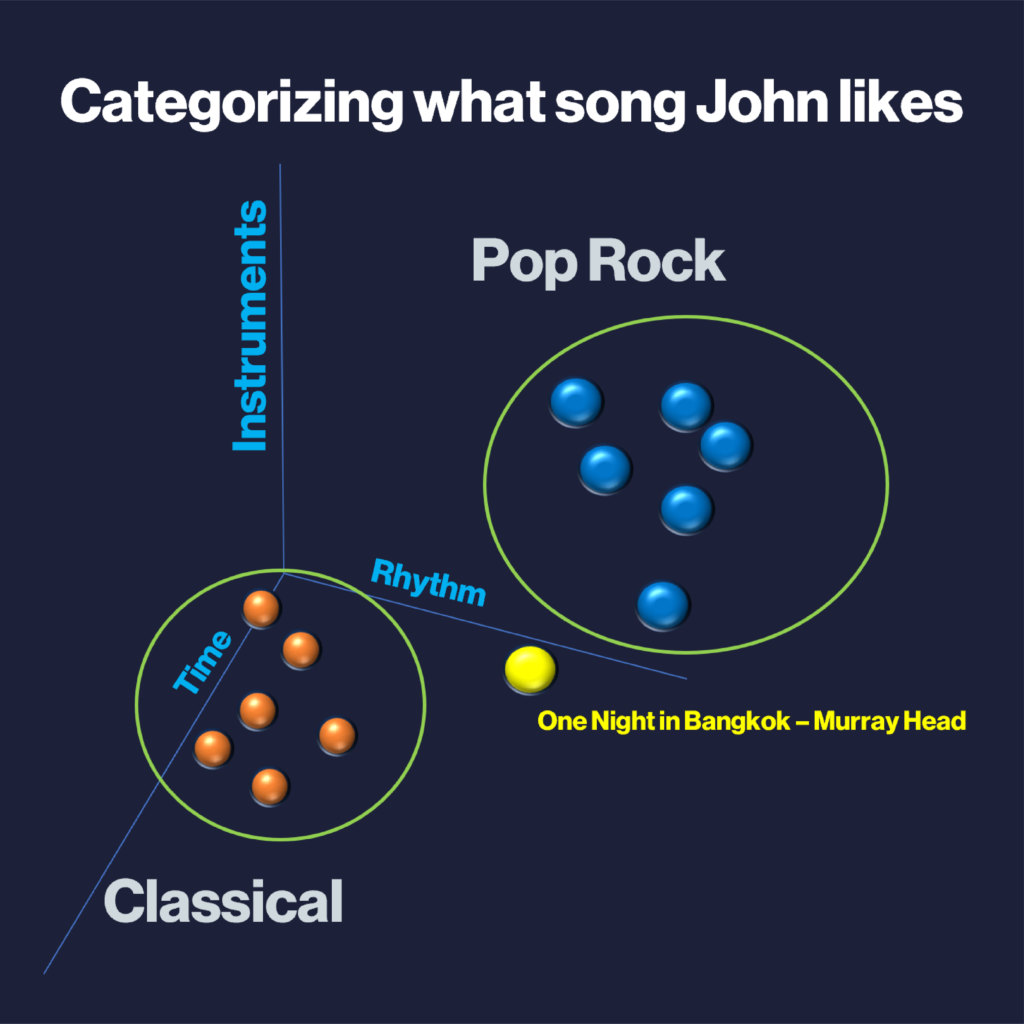

While many of today’s streaming services have developed their own proprietary take on predicting what kinds of music you want to listen to, a simple machine learning algorithm called K-Nearest Neighbors (KNN) has been foundational in the art of predicting music a user might want to hear. Believe it or not, KNN originated in 1951. Early efforts by streaming services used variations of this algorithm to evaluate the song you are listening to and compare it to different genres. KNN measures a relative “distance” between the song being played and songs whose genres are already known, then associates the song with the genre of its closest matches.

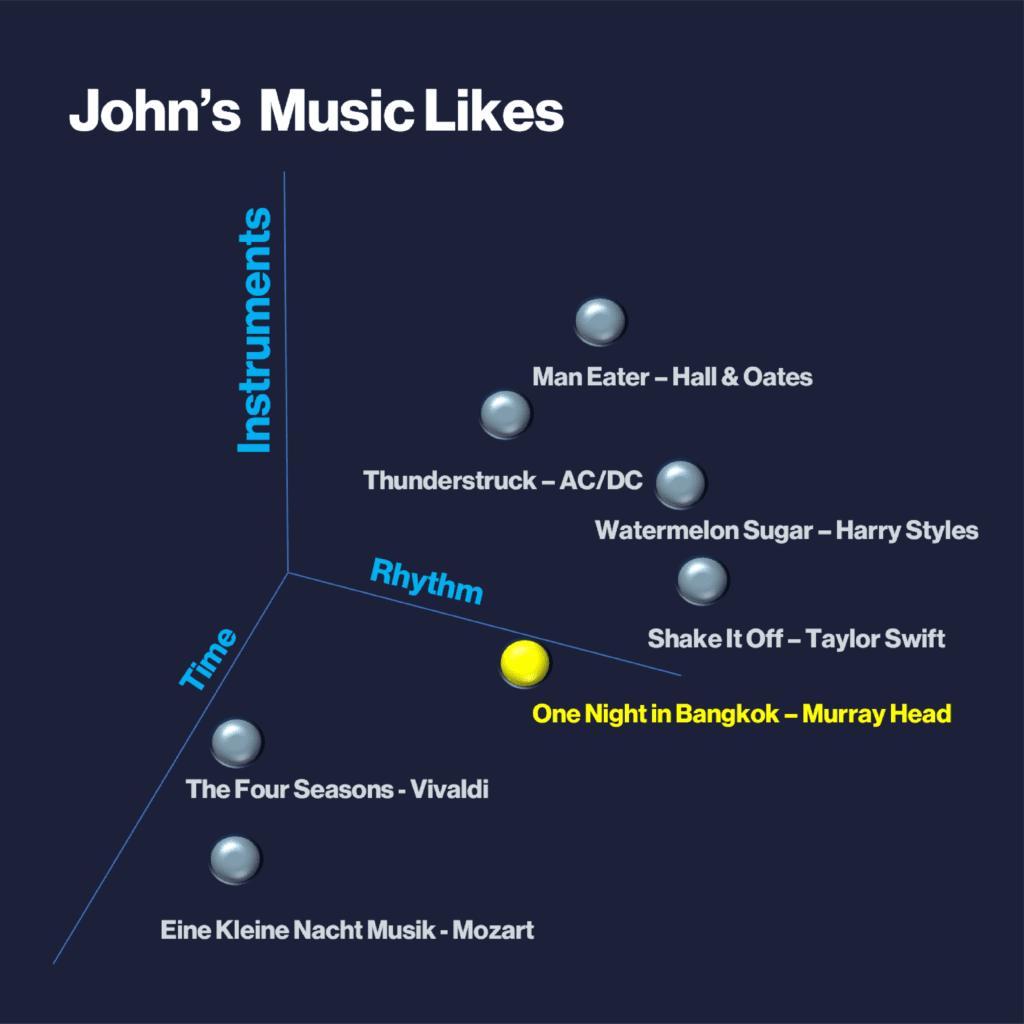
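For readers who like to see the idea in code, here is a bare-bones sketch of KNN. It assumes each song has already been reduced to a short list of numbers. The feature values, the tiny labeled catalog, and the choice of `k=3` are all made up for illustration; this is not how any particular service implements it.

```python
import math
from collections import Counter

# Made-up feature vectors: [tempo (scaled), electric guitar, drum kit, violins, horns]
LABELED_SONGS = [
    ([0.70, 1.0, 1.0, 0.0, 0.0], "hard rock"),
    ([0.65, 1.0, 1.0, 0.0, 0.0], "hard rock"),
    ([0.75, 1.0, 1.0, 0.0, 1.0], "hard rock"),
    ([0.40, 0.0, 0.0, 1.0, 1.0], "classical"),
    ([0.35, 0.0, 0.0, 1.0, 0.0], "classical"),
    ([0.55, 0.0, 1.0, 0.0, 1.0], "jazz"),
    ([0.50, 0.0, 1.0, 0.0, 1.0], "jazz"),
]


def knn_genre(song, labeled, k=3):
    """Return the majority genre among the k labeled songs closest to `song`."""
    # Sort the catalog by Euclidean distance to the song and keep the k nearest.
    nearest = sorted(labeled, key=lambda item: math.dist(song, item[0]))[:k]
    votes = Counter(genre for _, genre in nearest)
    return votes.most_common(1)[0][0]


now_playing = [0.68, 1.0, 1.0, 0.0, 0.0]  # fast tempo, guitars, and drums
print(knn_genre(now_playing, LABELED_SONGS))  # prints "hard rock"
```

The "distance" here is ordinary straight-line distance between two lists of numbers: the smaller it is, the more alike the two songs are.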

Let’s say you spend 45 minutes listening to everything from “Thunderstruck” by AC/DC to “The Four Seasons” by Vivaldi. KNN can associate attributes of each song to understand and analyze the music you listen to the most. To put it in simpler terms, if you’ve listened to five hard rock songs and two classical songs, the algorithm will statistically suggest more hard rock songs and perhaps include an occasional classical piece as well.
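The five-to-two example can be sketched in a few lines. Note that this is simple frequency weighting layered on top of the genre labels, not KNN itself, and the function names are hypothetical.

```python
import random
from collections import Counter

# Listening history from the example: five hard rock songs and two classical songs.
history = ["hard rock"] * 5 + ["classical"] * 2


def genre_weights(listened):
    """Share of the listening history that each genre accounts for."""
    counts = Counter(listened)
    total = sum(counts.values())
    return {genre: n / total for genre, n in counts.items()}


def suggest_genres(weights, n, seed=None):
    """Pick n genres at random, in proportion to their weights."""
    rng = random.Random(seed)
    genres = list(weights)
    return rng.choices(genres, weights=[weights[g] for g in genres], k=n)


weights = genre_weights(history)
print(weights)  # hard rock gets 5/7 of the weight, classical gets 2/7
print(suggest_genres(weights, n=10, seed=1))
```

Because the picks are weighted rather than fixed, hard rock dominates the suggestions while classical still has a chance to appear.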

Further, you can enrich the data the app is collecting by giving a song a “thumbs up” or “thumbs down.” This enables the music streaming service to tabulate and statistically suggest the kinds of music you likely want to hear next with more confidence.
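One simple way to picture that tabulation is a running score per genre. The point values below (one point per play, two for a thumbs up, minus two for a thumbs down) are assumptions I picked for illustration; real services tune these signals in their own ways.

```python
from collections import defaultdict

# Hypothetical point values for each kind of signal.
POINTS = {"play": 1.0, "up": 2.0, "down": -2.0}


def score_genres(events):
    """Tally a score per genre from (genre, signal) pairs."""
    scores = defaultdict(float)
    for genre, signal in events:
        scores[genre] += POINTS[signal]
    # Clamp at zero so a disliked genre never ends up with a negative score.
    return {genre: max(score, 0.0) for genre, score in scores.items()}


events = (
    [("hard rock", "play")] * 5
    + [("classical", "play")] * 2
    + [("classical", "up"), ("hard rock", "down")]
)
print(score_genres(events))  # {'hard rock': 3.0, 'classical': 4.0}
```

In this toy example, a single thumbs up and a single thumbs down are enough to move classical ahead of hard rock, which shows how explicit feedback can outweigh passive listening.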

And it doesn’t stop there. What if the streaming service considered the time of day you listen to music, and maybe the weather or your location while listening? Streaming apps can use these factors and others to further refine the songs they suggest to you.

Now that I’ve demystified AI by examining its impact on our daily lives, in my next blog I will explore how AI can help predict potential fraud, waste, and abuse before it happens.

For more wisdom from John, check out his previous blog post: Five Things That AI is Not.